Riemannian geometry, also known as elliptic geometry, is the geometry of the surface of a sphere. It replaces Euclid's Parallel Postulate with, "Through any point in the plane, there exists no line parallel to a given line." A line in this geometry is a great circle. The sum of the angles of a triangle in Riemannian geometry is greater than 180°.
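To make the "more than 180°" claim concrete, here is a minimal sketch that measures the interior angles of a triangle drawn on a unit sphere, with each vertex given as a 3-D vector. The angle at a vertex is taken between the tangent directions of the two great-circle arcs leaving it. The function names are illustrative only, and the sketch assumes Python 3.9+ for the `tuple[...]` type hint.

```python
import math

Vec = tuple[float, float, float]

def dot(u: Vec, v: Vec) -> float:
    return sum(a * b for a, b in zip(u, v))

def normalize(v: Vec) -> Vec:
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)

def tangent(at: Vec, toward: Vec) -> Vec:
    """Unit direction of the great circle leaving `at` toward `toward`:
    the part of `toward` orthogonal to `at`."""
    d = dot(at, toward)
    return normalize(tuple(t - d * a for a, t in zip(at, toward)))

def vertex_angle(a: Vec, b: Vec, c: Vec) -> float:
    """Interior angle at vertex a between arcs a->b and a->c, in degrees."""
    tb, tc = tangent(a, b), tangent(a, c)
    cos_angle = max(-1.0, min(1.0, dot(tb, tc)))  # clamp rounding noise
    return math.degrees(math.acos(cos_angle))

def angle_sum(a: Vec, b: Vec, c: Vec) -> float:
    """Sum of the three interior angles of a spherical triangle."""
    a, b, c = normalize(a), normalize(b), normalize(c)
    return vertex_angle(a, b, c) + vertex_angle(b, c, a) + vertex_angle(c, a, b)
```

For the triangle cut out by the three coordinate axes, `angle_sum((1, 0, 0), (0, 1, 0), (0, 0, 1))` gives 270°, three right angles. A very small triangle gives a sum only slightly above 180°, which is why the surface looks Euclidean locally. The excess over 180° (in radians) equals the triangle's area on the unit sphere (Girard's theorem): the 90° excess here is π/2, one eighth of the sphere's 4π.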

Friedmann equation: what is ρ, the density?
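For reference, one common form of the first Friedmann equation is written below. Here a is the scale factor, H the Hubble parameter, G the gravitational constant, ρ the mass density (energy density divided by c²), k the curvature constant (with a suitably normalized, k takes the values -1, 0 or +1), and Λ the cosmological constant. Texts differ on whether the vacuum term is written separately, as here, or folded into ρ as a vacuum density.

```latex
H^2 \equiv \left(\frac{\dot a}{a}\right)^2 = \frac{8\pi G}{3}\,\rho - \frac{k c^2}{a^2} + \frac{\Lambda c^2}{3}
```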

What are the three models of geometry? k = -1, k = 0, k = +1.

Negative curvature

Omega = the ratio of the actual density to the critical density.
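A small sketch of that ratio, assuming the usual expression for the critical density, ρ_c = 3H²/(8πG), obtained from the Friedmann equation with zero curvature and the vacuum term folded into ρ. The Hubble constant of 70 km/s/Mpc used below is only an illustrative input, and the helper names are hypothetical.

```python
import math

G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
MPC_IN_M = 3.0857e22   # one megaparsec in metres (rounded)

def hubble_to_si(h0_km_s_mpc: float) -> float:
    """Convert a Hubble constant from km/s/Mpc to 1/s."""
    return h0_km_s_mpc * 1e3 / MPC_IN_M

def critical_density(h0_km_s_mpc: float) -> float:
    """rho_c = 3 H^2 / (8 pi G), in kg/m^3."""
    h = hubble_to_si(h0_km_s_mpc)
    return 3.0 * h * h / (8.0 * math.pi * G)

def omega(rho: float, h0_km_s_mpc: float) -> float:
    """Density parameter: actual density divided by the critical density."""
    return rho / critical_density(h0_km_s_mpc)
```

With the assumed H0 = 70 km/s/Mpc, `critical_density(70.0)` comes out near 9.2 × 10⁻²⁷ kg/m³, roughly five or six hydrogen atoms per cubic metre. Omega above 1 means more than that, Omega below 1 means less.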

If we triangulate Omega for the universe we are in, Omega = Omega_m (mass) + Omega_Lambda (vacuum), what position would our universe hold geometrically, given those coordinates?
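One way to read "position" here is as a point in the (Omega_m, Omega_Lambda) plane. The line Omega_m + Omega_Lambda = 1 is the flat case, and the curvature parameter Omega_k = 1 - (Omega_m + Omega_Lambda) says which side of that line a point falls on: positive for open (k = -1), negative for closed (k = +1). The sketch below assumes that convention; the tolerance `tol` is a hypothetical stand-in for measurement uncertainty, which is not modelled.

```python
def omega_k(omega_m: float, omega_lambda: float) -> float:
    """Curvature density parameter: Omega_k = 1 - (Omega_m + Omega_Lambda)."""
    return 1.0 - (omega_m + omega_lambda)

def geometry(omega_m: float, omega_lambda: float, tol: float = 1e-3) -> str:
    """Place a point in the (Omega_m, Omega_Lambda) plane relative to the flat line.

    `tol` is an assumed tolerance, not a real error bar.
    """
    ok = omega_k(omega_m, omega_lambda)
    if abs(ok) <= tol:
        return "flat (k = 0)"
    if ok > 0:
        return "open (k = -1, negative curvature)"
    return "closed (k = +1, positive curvature)"
```

With illustrative values close to those commonly quoted for our universe, `geometry(0.3, 0.7)` returns `"flat (k = 0)"`. Matter alone at `geometry(0.3, 0.0)` would be open, and `geometry(1.5, 0.2)` would be closed.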

The basic understanding is of the evolution of Euclidean geometry toward a dynamical understanding, in the continued expression of that geometry toward a non-Euclidean freedom within the context of the universe.

Maybe one should look for "a location" and then proceed from there?

TWO UNIVERSES of different dimension and obeying disparate physical laws are rendered completely equivalent by the holographic principle. Theorists have demonstrated this principle mathematically for a specific type of five-dimensional spacetime ("anti–de Sitter") and its four-dimensional boundary. In effect, the 5-D universe is recorded like a hologram on the 4-D surface at its periphery. Superstring theory rules in the 5-D spacetime, but a so-called conformal field theory of point particles operates on the 4-D hologram. A black hole in the 5-D spacetime is equivalent to hot radiation on the hologram; for example, the hole and the radiation have the same entropy even though the physical origin of the entropy is completely different for each case. Although these two descriptions of the universe seem utterly unalike, no experiment could distinguish between them, even in principle. (By Jacob D. Bekenstein)

Consider any physical system, made of anything at all; let us call it The Thing. We require only that The Thing can be enclosed within a finite boundary, which we shall call the Screen (Figure 39). We would like to know as much as possible about The Thing. But we cannot touch it directly; we are restricted to making measurements of it on the Screen. We may send any kind of radiation we like through the Screen, and record whatever changes result on the Screen. The Bekenstein bound says that there is a general limit to how many yes/no questions we can answer about The Thing by making observations through the Screen that surrounds it. The number must be less than one quarter the area of the Screen, in Planck units. What if we ask more questions? The principle tells us that either of two things must happen. Either the area of the Screen will increase, as a result of doing an experiment that asks questions beyond the limit; or the experiments we do that go beyond the limit will erase, or invalidate, the answers to some of the previous questions. At no time can we know more about The Thing than the limit imposed by the area of the Screen. (Pages 171 and 172 of Three Roads to Quantum Gravity, by Lee Smolin)
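To get a feel for the size of that limit, here is a rough sketch that follows Smolin's phrasing literally: take a spherical Screen, express its area in Planck units (multiples of l_P² = ħG/c³), and divide by four. The spherical shape and the function names are assumptions for illustration. Stricter treatments state the bound as an entropy in natural units, so counting in bits would add a further factor of 1/ln 2.

```python
import math

# SI constants (CODATA values, rounded).
G = 6.67430e-11          # m^3 kg^-1 s^-2
HBAR = 1.054571817e-34   # J s
C = 299_792_458.0        # m / s

PLANCK_LENGTH = math.sqrt(HBAR * G / C**3)   # about 1.616e-35 m

def screen_area(radius_m: float) -> float:
    """Area of an assumed spherical Screen of the given radius, in m^2."""
    return 4.0 * math.pi * radius_m ** 2

def max_yes_no_answers(radius_m: float) -> float:
    """One quarter of the Screen's area in Planck units (Smolin's statement)."""
    area_in_planck_units = screen_area(radius_m) / PLANCK_LENGTH ** 2
    return area_in_planck_units / 4.0
```

A Screen of radius one metre allows roughly 1.2 × 10⁷⁰ answers. Because the count follows the area rather than the volume, doubling the radius only quadruples it.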

Holography encodes the information in a region of space onto a surface one dimension lower. It seems to be the property of gravity, as is shown by the fact that the area of the event horizon measures the number of internal states of a black hole. Holography would be a one-to-one correspondence between states in our four-dimensional world and states in higher dimensions. From a positivist viewpoint, one cannot distinguish which description is more fundamental. (Page 198, The Universe in a Nutshell, by Stephen Hawking)
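The statement that horizon area measures the number of internal states can be made numerical with the Bekenstein–Hawking entropy, S/k_B = A/(4 l_P²). The sketch below assumes the simplest case, a non-rotating, uncharged (Schwarzschild) black hole with horizon radius r_s = 2GM/c². The names are illustrative only.

```python
import math

G = 6.67430e-11          # m^3 kg^-1 s^-2
HBAR = 1.054571817e-34   # J s
C = 299_792_458.0        # m / s
SOLAR_MASS = 1.98847e30  # kg

def schwarzschild_radius(mass_kg: float) -> float:
    """r_s = 2 G M / c^2 (non-rotating, uncharged black hole assumed)."""
    return 2.0 * G * mass_kg / C**2

def horizon_entropy(mass_kg: float) -> float:
    """Bekenstein-Hawking entropy in units of k_B: horizon area / (4 * Planck area)."""
    area = 4.0 * math.pi * schwarzschild_radius(mass_kg) ** 2
    planck_area = HBAR * G / C**3
    return area / (4.0 * planck_area)
```

For one solar mass, r_s is about 3 km and `horizon_entropy(SOLAR_MASS)` is about 10⁷⁷, so the number of internal states would be of order e raised to that power. Since the area grows as M², doubling the mass quadruples the entropy.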

The problem is that the further you go in terms of particle reductionism, the more you meet a problem with discreteness in terms of "continuity of expression." I know what to call it, and it is of value in scientific investigation. Does this mean that the paradigmatic values by which one is governed, when using discreteness in terms of, let's say, computational values, might suffer?

While one might think it would be easy to accept a foundational approach toward some computational view of reality, does that view suffer under the plight of what exists in terms of information out there?

If such a view of computational validation works in terms of viewing "a second life," then how would you approach the resolvability of mathematical functions that exist in abstractness and are applied to the nature of our expressions? Why have computations not solved, let's say, the Riemann hypothesis?

Joel:

I wonder if this is related to the issue of the "non-computability" of the human mind put forward by Roger Penrose. Is this why we humans can do mathematics whereas a computer cannot?

There are some interesting quotes in the following article that come really close to what is implied by that difference.

You raised a question that has always been a troubling one for me. On a general level, how could such views have been arrived at that would allow one to access such a mathematical world?

The idea being that to get to the truth, one had to turn inward and find the very roots of all thought in some geometrical form. The closer one came to that truth, the more the understanding and schematics were drawn in that form. Not all can say the search for such truth resides within? Why the need for such geometry in relativism? The Riemann Hypothesis as a function of reality? Why has a computer not solved it?

My views were always general as to what we may have hoped to create in some kind of machine or mechanism. I just couldn't see this functionality in relation to the human brain as 1s and 0s.

I must say it never occurred to me how deeply it has occupied Penrose's mind. The start of your question, and the related perspectives of the authors revealed in the following discourse, have raised a wide range of views that bring out what is new to me in what you are asking.

Yet the real world has made major advancements in terms of digital physics and hyper physics. Has any of this touched the nature of consciousness? This would then lead to Penrose's angle on what consciousness is capable of and what a machine is capable of. That would be my guess.

Can one glean an understanding of what exists all around us without knowing how to look at what is available to us through our observations? You have to be able to use "distance" to arrive at a conclusion about the current state of the geometry, in order to understand how such perceptions characterize space and what may drive the universe in terms of its expression.

So there are many ongoing experiments that help to further question that perspective, test it, and validate it.

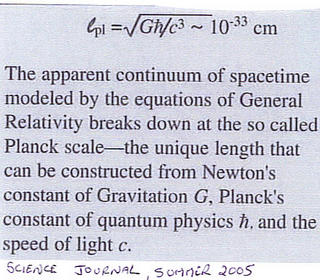

The problem is that at a certain length things break down. How can consciousness then be imparted to what is geometrically inherent in our expressions of the reality in which we live? Topology? Continuity of expression?

Paul Gauguin: Where Do We Come From? What Are We? Where Are We Going?

"On the right (Where do we come from?), we see the baby, and three young women - those who are closest to that eternal mystery. In the center, Gauguin meditates on what we are. Here are two women, talking about destiny (or so he described them), a man looking puzzled and half-aggressive, and in the middle, a youth plucking the fruit of experience. This has nothing to do, I feel sure, with the Garden of Eden; it is humanity's innocent and natural desire to live and to search for more life. A child eats the fruit, overlooked by the remote presence of an idol - emblem of our need for the spiritual. There are women (one mysteriously curled up into a shell), and there are animals with whom we share the world: a goat, a cat, and kittens. In the final section (Where are we going?), a beautiful young woman broods, and an old woman prepares to die. Her pallor and gray hair tell us so, but the message is underscored by the presence of a strange white bird. I once described it as 'a mutated puffin,' and I do not think I can do better. It is Gauguin's symbol of the afterlife, of the unknown (just as the dog, on the far right, is his symbol of himself)."

One then ponders how such a universe is part of something much greater in expression, and how this continuity of expression is portrayed in our universe. How is such a balance struck to maintain this feature as a geometrical understanding?

You have to go outside the box. Cosmologists are limited by this perspective. Others venture well beyond the constraints they apply about a beginning, an end, and all that lies in between. Birth and death are set within the greater expression of such a universe, on and on.

See:

- What is Happening at the Singularity?
- Space and Time: Einstein and Beyond