Picture of the 1913 Bohr model of the atom showing the Balmer transition from n=3 to n=2. The electronic orbitals (shown as dashed black circles) are drawn to scale, with 1 inch = 1 Angstrom; note that the radius of the orbital increases quadratically with n. The electron is shown in blue, the nucleus in green, and the photon in red. The frequency ν of the photon can be determined from Planck's constant h and the change in energy ΔE between the two orbitals. For the 3-2 Balmer transition depicted here, the wavelength of the emitted photon is 656 nm.

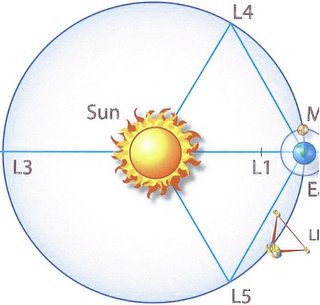
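The two quantitative claims in the caption (orbital radius growing as n², and a photon of about 656 nm for the 3→2 transition) can be checked with a short Python sketch. The constants, the Bohr level energies E_n = −13.6 eV / n², and the function names are assumptions added here for illustration; the caption does not give them.

```python
# Assumed constants (rounded CODATA values); not taken from the caption.
H = 6.62607015e-34               # Planck constant, J*s
C = 2.99792458e8                 # speed of light, m/s
EV = 1.602176634e-19             # joules per electronvolt
RYDBERG_EV = 13.605693           # binding energy of the n = 1 level, eV
BOHR_RADIUS_ANGSTROM = 0.529177  # radius of the n = 1 orbit, angstroms


def orbit_radius(n):
    """Radius of the n-th Bohr orbit in angstroms (grows as n squared)."""
    return BOHR_RADIUS_ANGSTROM * n**2


def level_energy(n):
    """Energy of the n-th Bohr level in eV (negative means bound)."""
    return -RYDBERG_EV / n**2


def photon(n_upper, n_lower):
    """Return (frequency in Hz, wavelength in nm) of the emitted photon."""
    delta_e = (level_energy(n_upper) - level_energy(n_lower)) * EV  # joules
    frequency = delta_e / H          # nu = delta E / h
    wavelength_nm = C / frequency * 1e9
    return frequency, wavelength_nm


for n in (1, 2, 3):
    print(f"n = {n}: radius = {orbit_radius(n):.3f} angstrom")

frequency, wavelength = photon(3, 2)
print(f"3 -> 2: {frequency:.3e} Hz, {wavelength:.1f} nm")
```

Under these assumptions the 3→2 wavelength comes out close to the 656 nm quoted in the caption, and the n = 3 orbit is nine times wider than the n = 1 orbit.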

In atomic physics, the Bohr model depicts the atom as a small, positively charged nucleus surrounded by electrons that travel in circular orbits around the nucleus — similar in structure to the solar system, but with electrostatic forces providing attraction, rather than gravity.

Introduced by Niels Bohr in 1913, the model's key success was in explaining the Rydberg formula for the spectral emission lines of atomic hydrogen; while the Rydberg formula had been known experimentally, it did not gain a theoretical underpinning until the Bohr model was introduced.

The Bohr model is a primitive model of the hydrogen atom. As a theory, it can be derived as a first-order approximation of the hydrogen atom using the broader and much more accurate quantum mechanics, and thus may be considered to be an obsolete scientific theory. However, because of its simplicity, and its correct results for selected systems (see below for application), the Bohr model is still commonly taught to introduce students to quantum mechanics.

To picture events in the cosmos, it helps to understand the spectra through which those events reveal themselves. You look at these beautiful pictures, and the information taken from them lets us see the elemental makeup behind, say, a blue giant's demise. What was that blue giant made of, in terms of its elemental structure?

The quantum leaps are explained on the basis of Bohr's theory of atomic structure. From the Lyman series to the Brackett series, it can be seen that the energy applied forces the hydrogen electrons to a higher energy level by a quantum leap. They remain at this level very briefly and, after about $10^{-8}$ s, they return to their initial or a lower level, emitting the excess energy in the form of photons (once again by a quantum leap).
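As a rough illustration of these downward leaps, the sketch below lists every jump an electron could make on its way down from a given level and names the series each jump belongs to. The function and dictionary names are hypothetical, and the sketch assumes, as the simple Bohr picture does, that every downward jump is allowed. It does not model the dwell time.

```python
# Series are named after the level on which the jump ends.
SERIES_BY_LOWER_LEVEL = {1: "Lyman", 2: "Balmer", 3: "Paschen", 4: "Brackett"}


def downward_transitions(n_start):
    """Return every (upper, lower) jump reachable on the way down from n_start."""
    return [
        (upper, lower)
        for upper in range(n_start, 1, -1)
        for lower in range(upper - 1, 0, -1)
    ]


for upper, lower in downward_transitions(4):
    print(f"{upper} -> {lower}: {SERIES_BY_LOWER_LEVEL[lower]} series")
```

An electron excited to n = 4 can therefore contribute to three Lyman lines, two Balmer lines and one Paschen line, depending on the path it takes down.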

Lyman series

Hydrogen atoms excited to luminescence emit characteristic spectra. On excitation, the electron of the hydrogen atom reaches a higher energy level. In this case, the electron is excited from the base state, with a principal quantum number of n = 1, to a level with a principal quantum number of n = 4. After an average dwell time of only about $10^{-8}$ s, the electron returns to its initial state, releasing the excess energy in the form of a photon.

The various transitions result in characteristic spectral lines with frequencies which can be calculated by $f = R\left(\frac{1}{n^2} - \frac{1}{m^2}\right)$, where $R$ is the Rydberg constant.

The lines of the Lyman series (n = 1) are located in the ultraviolet range of the spectrum. In this example, m can reach values of 2, 3 and 4 in succession.
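A minimal sketch of the frequency formula for the three Lyman lines of this example follows. It assumes R is expressed as a frequency (about 3.29 × 10^15 Hz, the Rydberg constant multiplied by the speed of light); that value, the speed of light and the function name are additions for illustration.

```python
RYDBERG_HZ = 3.28984196e15   # assumed: Rydberg constant expressed as a frequency, Hz
C = 2.99792458e8             # speed of light, m/s


def line_frequency(n, m):
    """Frequency in Hz of the jump from upper level m to lower level n."""
    if m <= n:
        raise ValueError("the upper level m must be greater than the lower level n")
    return RYDBERG_HZ * (1 / n**2 - 1 / m**2)


for m in (2, 3, 4):
    f = line_frequency(1, m)
    print(f"m = {m}: f = {f:.3e} Hz, wavelength = {C / f * 1e9:.1f} nm")
```

All three wavelengths fall between roughly 97 nm and 122 nm, well inside the ultraviolet.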

Balmer series

Hydrogen atoms excited to luminescence emit characteristic spectra. On excitation, the electron of the hydrogen atom reaches a higher energy level. In this case, the electron is excited from the base state, with a principal quantum number of n = 1, to a level with a principal quantum number of n = 7. The Balmer series becomes visible if the electron first falls to an excited state with the principal quantum number of n = 2 before returning to its initial state.

The various transitions result in characteristic spectral lines with frequencies which can be calculated by $f = R\left(\frac{1}{n^2} - \frac{1}{m^2}\right)$, where $R$ is the Rydberg constant.

The lines of the Balmer series (n = 2) are located in the visible range of the spectrum. In this example, m can reach values of 3, 4, 5, 6 and 7 in succession.
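To see where these five Balmer lines sit, the sketch below works directly in wavelength, using the equivalent form 1/λ = R∞ (1/2² − 1/m²). The value of R∞ in inverse metres and the 380 to 750 nm bounds for visible light are assumptions added here (the visible bounds are only a convention).

```python
RYDBERG_PER_M = 1.0973731568e7   # assumed: Rydberg constant in 1/m
VISIBLE_NM = (380.0, 750.0)      # assumed, conventional bounds of visible light


def balmer_wavelength_nm(m):
    """Wavelength in nm of the jump from upper level m down to n = 2."""
    if m <= 2:
        raise ValueError("Balmer lines need an upper level m of 3 or more")
    return 1e9 / (RYDBERG_PER_M * (1 / 2**2 - 1 / m**2))


def is_visible(wavelength_nm):
    """True if the wavelength lies inside the assumed visible range."""
    low, high = VISIBLE_NM
    return low <= wavelength_nm <= high


for m in (3, 4, 5, 6, 7):
    wavelength = balmer_wavelength_nm(m)
    print(f"m = {m}: {wavelength:.1f} nm, visible: {is_visible(wavelength)}")
```

With these bounds all five lines count as visible, although the m = 7 line, at just under 400 nm, lies close to the ultraviolet edge.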

Paschen series

Hydrogen atoms excited to luminescence emit characteristic spectra. On excitation, the electron of the hydrogen atom reaches a higher energy level. In this case, the electron is excited from the base state, with a principal quantum number of n = 1, to a level with a principal quantum number of n = 7. The Paschen series becomes visible if the electron first falls to an excited state with the principal quantum number of n = 3 before returning to its initial state.

The various transitions result in characteristic spectral lines with frequencies which can be calculated by $f = R\left(\frac{1}{n^2} - \frac{1}{m^2}\right)$, where $R$ is the Rydberg constant.

The lines of the Paschen series (n = 3) are located in the near infrared range of the spectrum. In this example, m can reach values of 4, 5, 6 and 7 in succession.

Brackett series

Hydrogen atoms excited to luminescence emit characteristic spectra. On excitation, the electron of the hydrogen atom reaches a higher energy level. In this case, the electron is excited from the base state, with a principal quantum number of n = 1, to a level with a principal quantum number of n = 8. The Brackett series becomes visible if the electron first falls to an excited state with the principal quantum number of n = 4 before returning to its initial state.

The lines of the Brackett series (n = 4) are located in the infrared range of the spectrum. In this example, m can reach values of 5, 6, 7 and 8 in succession.
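The infrared character of the Paschen and Brackett lines can be checked the same way. This sketch lists the lines named in the two examples and the short-wavelength limit of each series (the value approached as m grows without bound). The constant and the function names are assumptions added for illustration.

```python
RYDBERG_PER_M = 1.0973731568e7   # assumed: Rydberg constant in 1/m


def wavelength_nm(n, m):
    """Wavelength in nm of the jump from upper level m to lower level n."""
    return 1e9 / (RYDBERG_PER_M * (1 / n**2 - 1 / m**2))


def series_limit_nm(n):
    """Shortest wavelength of the series ending on level n (m -> infinity)."""
    return 1e9 * n**2 / RYDBERG_PER_M


# Upper levels taken from the two examples in the text.
EXAMPLES = {"Paschen": (3, (4, 5, 6, 7)), "Brackett": (4, (5, 6, 7, 8))}

for name, (n, upper_levels) in EXAMPLES.items():
    lines = ", ".join(f"{wavelength_nm(n, m):.0f}" for m in upper_levels)
    print(f"{name} (n = {n}): {lines} nm; series limit {series_limit_nm(n):.0f} nm")
```

Even the series limits (about 820 nm for Paschen and about 1458 nm for Brackett) lie beyond the red end of the visible spectrum, so every line of both series is infrared.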