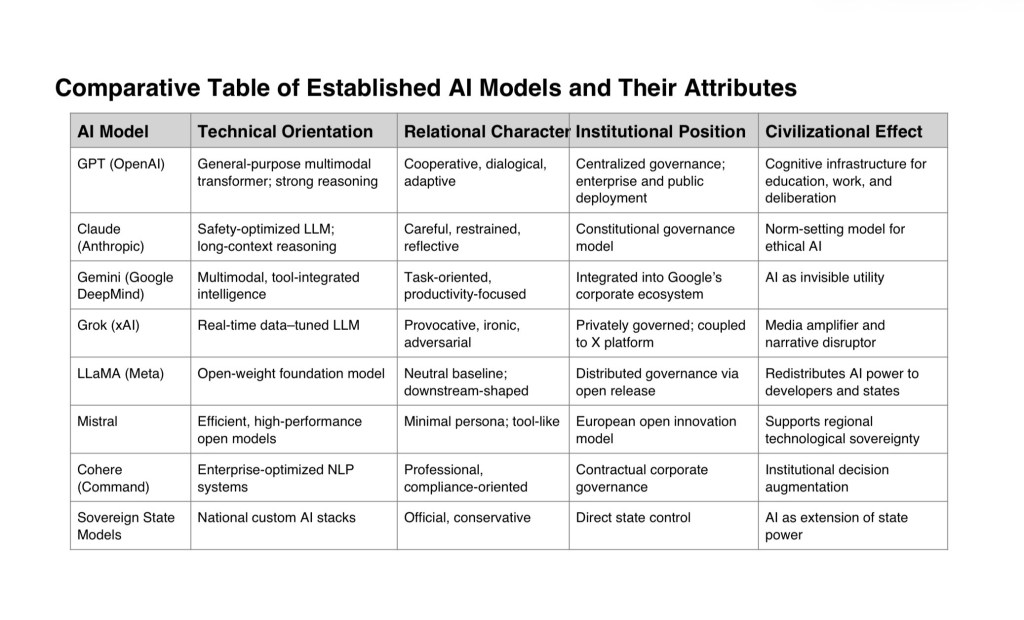

All established aspects of AI can be gathered under four governing domains, much as many virtues fall under a few forms.

1. Technical Intelligence (What the system is and does)

2. Relational Intelligence (How the system engages humans)

3. Institutional Intelligence (How the system is controlled, constrained, and deployed)

4. Civilizational Intelligence (What the system does to society, sovereignty, and meaning)

Introduction

This synthesis treats artificial intelligence not merely as a technical artifact, but as a new layer of governance—one that now stands between human intention and human action. AI mediates judgment, organizes knowledge, shapes behavior, and increasingly conditions authority itself. The question is not whether AI will govern, but how its governance will be recognized, constrained, and shared.

———————————————————————————————————

I. Autonomy and Sovereignty

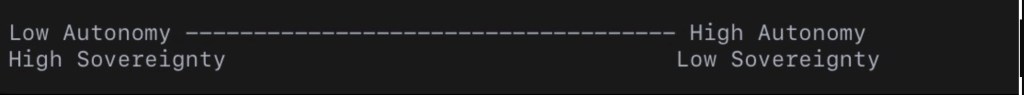

Autonomy and sovereignty are often confused, yet they are distinct.

Autonomy refers to the degree to which an AI system can act without immediate human intervention.

Sovereignty refers to who ultimately controls the system, sets its limits, and bears responsibility for its effects.

Socratic insight:

A system may appear autonomous to the citizen while remaining entirely under the sovereignty of its owner.

In practice, these diverge. An AI may appear autonomous to citizens (responding instantly, advising continuously, refusing selectively) while remaining fully under the sovereign control of a corporation or a state. This divergence produces a novel condition: governance without visibility.

The danger does not lie in autonomy itself, but in unacknowledged sovereignty. When control is hidden, consent becomes impossible.

II. AI as Political Instrument

Political instruments have historically included law, currency, education, and force. AI now joins this list, though it operates differently.

AI systems influence politics through three primary functions:

1. Agenda setting — determining which questions are asked, answered, or ignored.

2. Narrative shaping — framing tone, legitimacy, and interpretive boundaries.

3. Behavioral steering — guiding action through defaults, recommendations, and refusals.

Unlike traditional instruments, AI persuades while appearing neutral. It governs not by command, but by assistance. This makes its influence difficult to contest, because it is rarely recognized as influence at all.

III. AI as Law Without Legislators

Law, in essence, performs three functions:

- it permits,

- it forbids,

- and it conditions behavior.

AI systems already perform all three.

A refusal functions as prohibition.

A completion functions as permission.

A default or recommendation functions as incentive.

Yet these rule-like effects emerge without legislatures, without public deliberation, and without explicit democratic authorization. The result is normativity without enactment—a form of law that is administered rather than debated.

This is not tyranny in the classical sense. It is administration without accountability, and therefore more difficult to resist.

A Minimal AI Civic Charter

To preserve citizenship under conditions of mediated intelligence, the following principles are necessary.

1. Human Supremacy of Judgment

AI may inform human decision-making but must never replace final human judgment in matters of rights, law, or force.

2. Traceable Authority

Every consequential AI system must be attributable to a clearly identifiable governing authority.

3. Right of Contestation

Citizens must be able to challenge, appeal, or bypass AI-mediated decisions that affect them.

4. Proportional Autonomy

The greater the societal impact of an AI system, the lower its permissible autonomy.

5. Transparency of Constraints

The purposes, boundaries, and refusal conditions of AI systems must be publicly disclosed, even if internal mechanics remain opaque.

A system that cannot be questioned cannot be governed.

Failure Modes of Democratic Governance Under AI

Democratic systems fail under AI not through collapse, but through quiet erosion.

1. Automation Bias

Human judgment defers excessively to AI outputs, even when context or ethics demand otherwise.

2. Administrative Drift

Policy is implemented through systems rather than through legislated law, bypassing democratic debate.

3. Opacity of Power

Citizens cannot determine who is responsible for decisions made or enforced by AI.

4. Speed Supremacy

Decisions occur faster than deliberation allows, replacing judgment with optimization.

5. Monopoly of Intelligence

Dependence on a single dominant AI system or provider concentrates epistemic power.

A democracy that cannot see how it is governed is no longer fully self-governing.

AI and Sovereignty in Canada’s Federated System

Canada’s constitutional order divides sovereignty among federal, provincial, and Indigenous authorities. AI challenges this structure by operating across jurisdictions while obeying none by default.

Federal deployment risks re-centralization of authority.

Provincial deployment risks fragmentation and inequality of capacity.

Private deployment risks displacement of public governance altogether.

Key tensions include:

- Data jurisdiction and cross-border control,

- Automation of public services,

- Procurement dependence on foreign firms,

- Unequal provincial capacity,

- Indigenous data sovereignty and self-determination.

Without coordination, AI will reorganize sovereignty by default rather than by law.

A federated AI approach would require:

- Shared national standards,

- Provincial veto points for high-impact systems,

- Explicit non-delegation clauses for core democratic functions,

- Formal recognition of Indigenous authority over data and algorithmic use.

Closing Reflection

AI does not abolish democracy. It tests whether democracy can recognize new forms of power.

The question before us is not whether machines will think, but whether citizens will continue to think together, visibly, and with authority.

If AI becomes the silent legislator of society, citizenship fades.

If it becomes a servant of collective judgment, citizenship may yet deepen.

That choice remains human.