Illustration: Sandbox Studio

The first round of physics

Nine proposals are under consideration for the initial suite of physics experiments at DUSEL, and scientists have received $21 million in NSF funding to refine them. The proposals cover four areas of research:

- What is the nature of dark matter? (Proposals for LZ3, COUPP, GEODM, and MAX)

- Are neutrinos their own antiparticles? (Majorana, EXO)

- How do stars create the heavy elements? (DIANA)

- What role did neutrinos play in the evolution of the universe? (LBNE)

In addition, scientists propose to build a generic underground facility (FAARM) that will monitor the mine's naturally occurring radioactivity, which can interfere with the search for dark matter. The facility also would measure particle emissions from various materials, and help develop and refine technologies for future underground physics experiments.

But why are there four separate proposals for how to search for dark matter? Because they do not yet know the nature of dark-matter particles or how those particles interact with ordinary matter, scientists would like to use a variety of detector materials to look for the particles and study their interactions with atoms of different sizes. Using different technologies would also provide an independent cross-check of the experimental results.

"We strongly feel we need two or more experiments," says Bernard Sadoulet of UC Berkeley, an expert on dark-matter searches. "If money were not an issue, you would build at least three experiments."

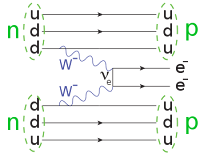

The largest experiment intended for DUSEL is the Long-Baseline Neutrino Experiment (see graphic), a project that involves both the DOE and NSF. Scientists would use the LBNE to explore whether neutrinos break one of the most fundamental laws of physics: the symmetry between matter and antimatter. In 1980, James Cronin and Val Fitch received the Nobel Prize for the observation that quarks can violate this symmetry. But the effect is too small to explain the dominance of matter over antimatter in our universe. Neutrinos might be the answer.

The LBNE scientists would generate a high-intensity neutrino beam at DOE's Fermi National Accelerator Laboratory, 800 miles east of Homestake, and aim it straight through the Earth at two or more enormous neutrino detectors in the DUSEL mine, each containing the equivalent of 100,000 tons of water.

Studies have shown that the rock at the 4850-foot level of the mine would support the safe construction of the caverns needed to house these detectors. In January, the LBNE experiment received first-stage approval, also known as Mission Need, from the DOE.

Lesko and his team are now combining all engineering studies and science proposals into an overall proposal for review.

"By the end of this summer, we hope to complete a preliminary design of the DUSEL facility and then integrate it with a generic suite of experiments," Lesko says. "While formal selection of the experiments will not have been made by that time, we know enough about them now that we can move forward with the preliminary design. The experiments themselves will be selected through a peer-review process, as is common in the NSF."

If all goes well, Lesko says, scientists and engineers could break ground on the major DUSEL excavations in 2013, marking the start of a new era for deep underground research in the United States.